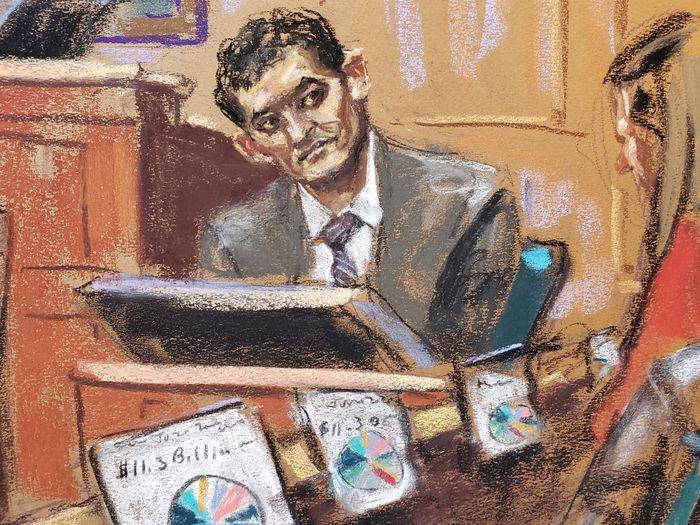

Sam Bankman-Fried’s Lawyers Seek to Regain Ground in FTX Trial

Founder looks to rebound from cross-examination, with closing arguments expected to begin Wednesday

Sam Bankman-Fried’s lawyers rested their case Tuesday after seeking to rehabilitate the FTX founder’s credibility from the prosecutors’ two-day grilling.

Bankman-Fried, dressed in a grey suit, floundered through the end of Assistant U.S. Attorney Danielle Sassoon’s cross-examination.

For a second day, Sassoon walked Bankman-Fried through balance sheets, communications and tweets, again highlighting inconsistencies—or what she portrayed as outright lies—between the defendant’s public statements and his private knowledge.

Bankman-Fried repeatedly told jurors he couldn’t recall many of his past statements. He said he couldn’t remember the exact timeline of events.

Defence attorney Mark Cohen sought to elicit testimony to explain his client’s evasiveness. He asked Bankman-Fried about the reasons for his foggy memory, his use of a private jet and his contempt for regulation.

“You used the phrase ‘f— regulators,’ ” Cohen said, referring to a series of messages between Bankman-Fried and a Vox reporter. “Was that the full extent of the chain?”

It wasn’t, said Bankman-Fried, adding that he felt that his efforts to work with regulators might have only led to more bad regulation. “I was somewhat frustrated,” he said.

Cohen asked about the huge amount of evidence in the case—suggesting his client couldn’t possibly remember every document—and his many media interviews.

Bankman-Fried told the jury he talked to about 50 reporters during the time between FTX’s collapse and his arrest, typically preparing between 30 seconds and an hour for each interview. When he testified before Congress, others helped him prepare his testimony, he said.

Bankman-Fried’s testimony, which formed the bulk of his defence team’s presentation, is likely crucial to jurors’ determination of whether to find him guilty of fraud and other charges. Closing arguments are scheduled for Wednesday, which would likely put the case in the jury’s hands on Thursday.

About half of the jurors watched Bankman-Fried as he spoke. Some scribbled notes and others gazed at the floor. One man closed his eyes. Damian Williams, the Manhattan U.S. attorney who has given priority to prosecuting cryptocurrency cases, sat in the front row of the courtroom gallery.

Bankman-Fried again answered some of the prosecutor’s questions by quibbling with their premise. When asked about an $8 billion hole in the balance sheet of Alameda Research, FTX’s sister hedge fund, he said that “hole” wasn’t the word he would use. He said he couldn’t speak with exact confidence about whether some FTX customers, outside of its sister hedge fund, had special privileges.

Sassoon asked if it was Bankman-Fried’s practice to maximise making money even with the risk of going bust. “It depends on the context,” he replied. He later added, “With respect to some of them, yes.”

Sassoon concluded her cross-examination by playing a recording of a Nov. 9, 2022, all-hands meeting in which Caroline Ellison, the former chief executive of Alameda Research and Bankman-Fried’s former girlfriend, spoke with Alameda staffers. Ellison, her voice halting, said she had talked about Alameda’s use of customer funds with Bankman-Fried and two of his top deputies, Nishad Singh and Gary Wang.

“Ms. Ellison identified you, Gary and Nishad as her co-conspirators, correct?” Sassoon asked.

Sassoon showed jurors a document, from Dec. 25, 2022, in which Bankman-Fried appeared to be analysing his own potential legal jeopardy and assessing how the government viewed the alleged conspiracy. While it was public that Ellison and Wang were cooperating with prosecutors, Bankman-Fried wasn’t sure if Singh, a former FTX executive, would be charged.

“They don’t seem to be keeping a seat warm for him as a defendant,” the document said.

“You wrote that, Mr. Bankman-Fried?” asked Sassoon. “I think so,” he said.

Singh, who later pleaded guilty, testified for the government earlier in the trial.

Later, Bankman-Fried’s lawyer referenced a photograph of Bankman-Fried on a private jet, reclining with his eyes closed. The prosecution had shown the jury the photo as an example of excess spending. Cohen asked Bankman-Fried if he remembered the photo.

“A very flattering one,” Bankman-Fried said sarcastically, before agreeing that using a private jet was a valid business expense.

“It was very logistically difficult to travel between the Bahamas and a few places, chiefly Washington, D.C.,” the FTX founder told the jury.

After the defence attorney wrapped up, Sassoon told the judge she had no more questions. Bankman-Fried took a long swig from his water bottle as he stepped down for his final time from the witness stand.

Copyright 2020, Dow Jones & Company, Inc. All Rights Reserved Worldwide.



Continued stagflation and cost-of-living pressures are causing couples to think twice about starting a family, new data has revealed, with long-term impacts expected.

Australia is in the midst of a ‘baby recession’ with preliminary estimates showing the number of births in 2023 fell by more than four percent to the lowest level since 2006, according to KPMG. The consultancy firm says this reflects the impact of cost-of-living pressures on the feasibility of younger Australians starting a family.

KPMG estimates that 289,100 babies were born in 2023. This compares to 300,684 babies in 2022 and 309,996 in 2021, according to the Australian Bureau of Statistics (ABS). KPMG urban economist Terry Rawnsley said weak economic growth often leads to a reduced number of births. ABS data shows that gross domestic product (GDP) growth slowed to 1.5 percent in 2023. With the population growing by 2.5 percent over the same year, GDP on a per capita basis went into negative territory, down one percent over the 12 months.

“Birth rates provide insight into long-term population growth as well as the current confidence of Australian families,” said Mr Rawnsley. “We haven’t seen such a sharp drop in births in Australia since the period of economic stagflation in the 1970s, which coincided with the initial widespread adoption of the contraceptive pill.”

Mr Rawnsley said many Australian couples delayed starting a family while the pandemic played out in 2020. The number of births fell from 305,832 in 2019 to 294,369 in 2020. Then in 2021, strong employment and vast amounts of stimulus money, along with high household savings due to lockdowns, gave couples better financial means to have a baby. This led to a rebound in births.

However, the re-opening of the global economy in 2022 led to soaring inflation. By the start of 2023, the Australian consumer price index (CPI) had risen to its highest level since 1990 at 7.8 percent per annum. By that stage, the Reserve Bank had already commenced an aggressive rate-hiking strategy to fight inflation and had raised the cash rate every month between May and December 2022.

Five more rate hikes during 2023 put further pressure on couples with mortgages and put the brakes on family formation. “This combination of the pandemic and rapid economic changes explains the spike and subsequent sharp decline in birth rates we have observed over the past four years,” Mr Rawnsley said.

The impact of high costs of living on couples’ decision to have a baby is highlighted in births data for the capital cities. KPMG estimates there were 60,860 births in Sydney in 2023, down 8.6 percent from 2019. There were 56,270 births in Melbourne, down 7.3 percent. In Perth, there were 25,020 births, down 6 percent, while in Brisbane there were 30,250 births, down 4.3 percent. Canberra was the only capital city where there was no fall in the number of births in 2023 compared to 2019.

“CPI growth in Canberra has been slightly subdued compared to that in other major cities, and the economic outlook has remained strong,” Mr Rawnsley said. “This means families have not been hurting as much as those in other capital cities, and in turn, we’ve seen a stabilisation of births in the ACT.”
