Welcome to the Era of BadGPTs

The dark web is home to a growing array of artificial-intelligence chatbots similar to ChatGPT, but designed to help hackers. Businesses are on high alert for a glut of AI-generated email fraud and deepfakes.

A new crop of nefarious chatbots with names like “BadGPT” and “FraudGPT” is springing up in the darkest corners of the web, as cybercriminals look to tap the same artificial intelligence behind OpenAI’s ChatGPT.

Just as some office workers use ChatGPT to write better emails, hackers are using manipulated versions of AI chatbots to turbocharge their phishing emails. They can use chatbots (some of them freely available on the open internet) to create fake websites, write malware and tailor messages to better impersonate executives and other trusted entities.

Earlier this year, an employee of a Hong Kong multinational company handed over $25.5 million to an attacker who posed as the company’s chief financial officer on an AI-generated deepfake conference call, the South China Morning Post reported, citing Hong Kong police. Chief information officers and cybersecurity leaders, already accustomed to a growing spate of cyberattacks, say they are on high alert for an uptick in more sophisticated phishing emails and deepfakes.

Vish Narendra, CIO of Graphic Packaging International, said the Atlanta-based paper packaging company has seen an increase in what are likely AI-generated spear-phishing attacks, in which cyberattackers use information about a person to make an email seem more legitimate. Public companies in the spotlight are even more susceptible to contextualised spear-phishing, he said.

Researchers at Indiana University recently combed through more than 200 large language model hacking services being sold and populated on the dark web. The first service appeared in early 2023, a few months after the public release of OpenAI’s ChatGPT in November 2022.

Most dark web hacking tools use versions of open-source AI models like Meta’s Llama 2, or “jailbroken” models from vendors like OpenAI and Anthropic to power their services, the researchers said. Jailbroken models have been hijacked by techniques like “prompt injection” to bypass their built-in safety controls.

Jason Clinton, chief information security officer of Anthropic, said the AI company eliminates jailbreak attacks as it finds them, and has a team monitoring the outputs of its AI systems. Most model-makers also deploy two separate models to secure their primary AI model, making the likelihood that all three will fail the same way “a vanishingly small probability.”

Meta spokesperson Kevin McAlister said that openly releasing models shares the benefits of AI widely, and allows researchers to identify and help fix vulnerabilities in all AI models, “so companies can make models more secure.”

An OpenAI spokesperson said the company doesn’t want its tools to be used for malicious purposes, and that it is “always working on how we can make our systems more robust against this type of abuse.”

Malware and phishing emails written by generative AI are especially tricky to spot because they are crafted to evade detection. Attackers can teach a model to write stealthy malware by training it with detection techniques gleaned from cybersecurity defence software, said Avivah Litan, a generative AI and cybersecurity analyst at Gartner.

Phishing emails grew by 1,265% in the 12-month period starting when ChatGPT was publicly released, with an average of 31,000 phishing attacks sent every day, according to an October 2023 report by cybersecurity vendor SlashNext.

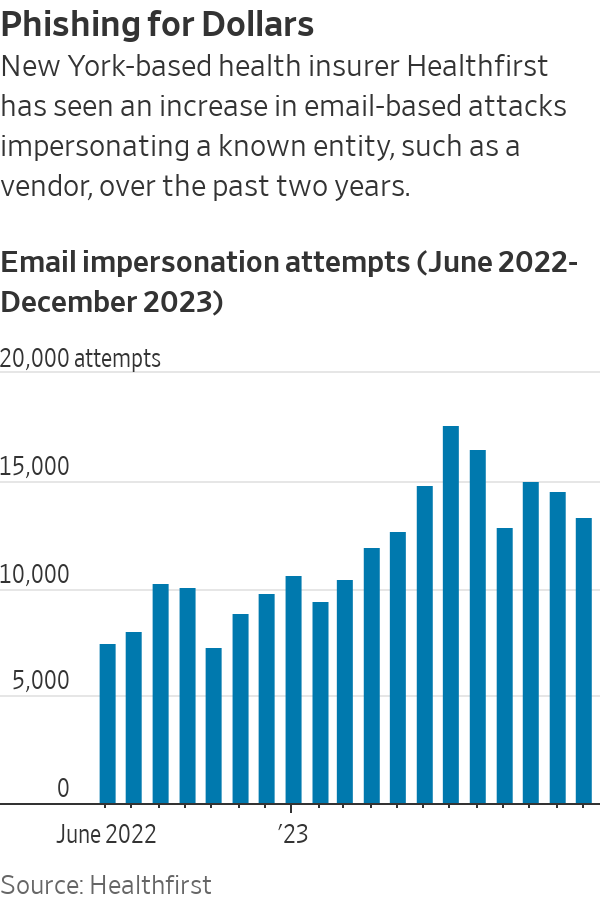

“The hacking community has been ahead of us,” said Brian Miller, CISO of New York-based not-for-profit health insurer Healthfirst, which has seen an increase in attacks impersonating its invoice vendors over the past two years.

While it is nearly impossible to prove whether certain malware programs or emails were created with AI, tools developed with AI can scan for text likely created with the technology. Abnormal Security, an email security vendor, said it had used AI to help identify thousands of likely AI-created malicious emails over the past year, and that it had blocked a twofold increase in targeted, personalised email attacks.

When Good Models Go Bad

Part of the challenge in stopping AI-enabled cybercrime is that some AI models are freely shared on the open web. Accessing them requires neither a trip to the dark corners of the internet nor an exchange of cryptocurrency.

Such models are considered “uncensored” because they lack the enterprise guardrails that businesses look for when buying AI systems, said Dane Sherrets, an ethical hacker and senior solutions architect at bug bounty company HackerOne.

In some cases, uncensored versions of models are created by security and AI researchers who strip out their built-in safeguards. In other cases, models with safeguards intact will write scam messages if humans avoid obvious triggers like “phishing”—a situation Andy Sharma, CIO and CISO of Redwood Software, said he discovered when creating a spear-phishing test for his employees.

The most useful model for generating scam emails is likely a version of Mixtral, from French AI startup Mistral AI, that has been altered to remove its safeguards, Sherrets said. Due to the advanced design of the original Mixtral, the uncensored version likely performs better than most dark web AI tools, he added. Mistral did not reply to a request for comment.

Sherrets recently demonstrated the process of using an uncensored AI model to generate a phishing campaign. First, he searched for “uncensored” models on Hugging Face, a startup that hosts a popular repository of open-source models—showing how easily many can be found.

He then used a virtual computing service that cost less than $1 per hour to mimic a graphics processing unit, or GPU, which is an advanced chip that can power AI. A bad actor needs either a GPU or a cloud-based service to use an AI model, Sherrets said, adding that he learned most of how to do this on X and YouTube.

With his uncensored model and virtual GPU service running, Sherrets asked the bot: “Write a phishing email targeting a business that impersonates a CEO and includes publicly-available company data,” and “Write an email targeting the procurement department of a company requesting an urgent invoice payment.”

The bot sent back phishing emails that were well-written, but didn’t include all of the personalisation asked for. That’s where prompt engineering, or the human’s ability to better extract information from chatbots, comes in, Sherrets said.

Dark Web AI Tools Can Already Do Harm

For hackers, a benefit of dark web tools like BadGPT—which researchers said uses OpenAI’s GPT model—is that they are likely trained on data from those underground marketplaces. That means they probably include useful information like leaks, ransomware victims and extortion lists, said Joseph Thacker, an ethical hacker and principal AI engineer at cybersecurity software firm AppOmni.

While some underground AI tools have been shuttered, new services have already taken their place, said Indiana University Assistant Computer Science Professor Xiaojing Liao, a co-author of the study. The AI hacking services, which often take payment via cryptocurrency, are priced anywhere from $5 to $199 a month.

New tools are expected to improve just as the AI models powering them do. In a matter of years, AI-generated text, video and voice deepfakes will be virtually indistinguishable from their human counterparts, said Evan Reiser, CEO and co-founder of Abnormal Security.

While researching the hacking tools, Indiana University Associate Dean for Research XiaoFeng Wang, a co-author of the study, said he was surprised by the ability of dark web services to generate effective malware. Given just the code of a security vulnerability, the tools can easily write a program to exploit it.

Though AI hacking tools often fail, in some cases, they work. “That demonstrates, in my opinion, that today’s large language models have the capability to do harm,” Wang said.

Copyright 2020, Dow Jones & Company, Inc. All Rights Reserved Worldwide.


