Can Artificial Intelligence Replace Human Therapists?

Three experts discuss the promise—and problems—of relying on algorithms for our mental health.

Could artificial intelligence reduce the need for human therapists?

Websites, smartphone apps and social-media sites are dispensing mental-health advice, often using artificial intelligence. Meanwhile, clinicians and researchers are looking to AI to help define mental illness more objectively, identify high-risk people and ensure quality of care.

Some experts believe AI can make treatment more accessible and affordable. There has long been a severe shortage of mental-health professionals, and since the Covid pandemic, the need for support is greater than ever. For instance, users can have conversations with AI-powered chatbots, allowing them to get help anytime, anywhere, often for less money than traditional therapy.

The algorithms underpinning these endeavours learn by combing through large amounts of data generated from social-media posts, smartphone data, electronic health records, therapy-session transcripts, brain scans and other sources to identify patterns that are difficult for humans to discern.

Despite the promise, there are some big concerns. The efficacy of some products is questionable, a problem only made worse by the fact that private companies don’t always share information about how their AI works. Accuracy problems raise concerns about amplifying bad advice to people who may be vulnerable or incapable of critical thinking, as well as fears of perpetuating racial or cultural biases. Concerns also persist about private information being shared in unexpected ways or with unintended parties.

The Wall Street Journal hosted a conversation via email and Google Doc about these issues with John Torous, director of the digital-psychiatry division at Beth Israel Deaconess Medical Center and assistant professor at Harvard Medical School; Adam Miner, an instructor at the Stanford School of Medicine; and Zac Imel, professor and director of clinical training at the University of Utah and co-founder of LYSSN.io, a company using AI to evaluate psychotherapy. Here’s an edited transcript of the discussion.

Leaps forward

WSJ: What is the most exciting way AI and machine learning are being used to diagnose mental disorders and improve treatments?

DR. MINER: AI can speed up access to appropriate services, like crisis response. The current Covid pandemic is a strong example where we see both the potential for AI to help facilitate access and triage, while also bringing up privacy and misinformation risks. This challenge—deciding which interventions and information to champion—is an issue in both pandemics and in mental health care, where we have many different treatments for many different problems.

DR. IMEL: In the near term, I am most excited about using AI to augment or guide therapists, such as giving feedback after the session or even providing tools to support self-reflection. Passive phone-sensing apps [that run in the background on users’ phones and attempt to monitor users’ moods] could be exciting if they predict later changes in depression and suggest interventions to do something early. Also, research on remote sensing in addiction, using tools to detect when a person might be at risk of relapse and suggesting an intervention or coping skills, is exciting.

DR. TOROUS: On a research front, AI can help us unlock some of the complexities of the brain and work toward understanding these illnesses better, which can help us offer new, effective treatment. We can generate a vast amount of data about the brain from genetics, neuroimaging, cognitive assessments and now even smartphone signals. We can utilize AI to find patterns that may help us unlock why people develop mental illness, who responds best to certain treatments and who may need help immediately. Using new data combined with AI will likely help us unlock the potential of creating new personalized and even preventive treatments.

WSJ: Do you think automated programs that use AI-driven chatbots are an alternative to therapy?

DR. TOROUS: In a recent paper I co-authored, we looked at the more recent chatbot literature to see what the evidence says about what they really do. Overall, it was clear that while the idea is exciting, we are not yet seeing evidence matching marketing claims. Many of the studies have problems. They are small. They are difficult to generalize to patients with mental illness. They look at feasibility outcomes instead of clinical-improvement endpoints. And many studies do not feature a control group to compare results.

DR. MINER: I don’t think it is an “us vs. them, human vs. AI” situation with chatbots. The important backdrop is that we, as a community, understand we have real access issues and some people might not be ready or able to get help from a human. If chatbots prove safe and effective, we could see a world where patients access treatment and decide if and when they want another person involved. Clinicians would be able to spend time where they are most useful and wanted.

WSJ: Are there cases where AI is more accurate or better than human psychologists, therapists or psychiatrists?

DR. IMEL: Right now, it’s pretty hard to imagine replacing human therapists. Conversational AI is not good at things we take for granted in human conversation, like remembering what was said 10 minutes ago or last week and responding appropriately.

DR. MINER: This is certainly where there is both excitement and frustration. I can’t remember what I had for lunch three days ago, and an AI system can recall all of Wikipedia in seconds. For raw processing power and memory, it isn’t even a contest between humans and AI systems. However, Dr. Imel’s point is crucial around conversations: Things humans do without effort in conversation are currently beyond the most powerful AI system.

An AI system that is always available and can hold thousands of simple conversations at the same time may create better access, but the quality of the conversations may suffer. This is why companies and researchers are looking at AI-human collaboration as a reasonable next step.

DR. IMEL: For example, studies show AI can help “rewrite” text statements to be more empathic. AI isn’t writing the statement, but is trained to help a potential listener possibly tweak it.

WSJ: As the technology improves, do you see chatbots or smartphone apps siphoning off any patients who might otherwise seek help from therapists?

DR. TOROUS: As more people use apps as an introduction to care, it will likely increase awareness of and interest in mental health and the demand for in-person care. I have not met a single therapist or psychiatrist who is worried about losing business to apps; rather, app companies are trying to hire more therapists and psychiatrists to meet the rising need for clinicians supporting apps.

DR. IMEL: Mental-health treatment has a lot in common with teaching. Yes, there are things technology can do in order to standardise skill building and increase access, but as parents have learned in the last year, there is no replacing what a teacher does. Humans are imperfect, we get tired and are inconsistent, but we are pretty good at connecting with other humans. The future of technology in mental health is not about replacing humans, it’s about supporting them.

WSJ: What about schools or companies using apps in situations when they might otherwise hire human therapists?

DR. MINER: One challenge we are facing is that the deployment of apps in schools and at work often lacks the rigorous evaluation we expect in other types of medical interventions. Because apps can be developed and deployed so quickly, and their content can change rapidly, prior approaches to quality assessment, such as multiyear randomized trials, are not feasible if we are to keep up with the volume and speed of app development.

Judgment calls

WSJ: Can AI be used for diagnoses and interventions?

DR. IMEL: I might be a bit of a downer here—building AI to replace current diagnostic practices in mental health is challenging. Determining if someone meets criteria for major depression right now is nothing like finding a tumour in a CT scan—something that is expensive, labour-intensive and prone to errors of attention, and where AI is already proving helpful. Depression is measured very well with a nine-question survey.

DR. MINER: I agree that diagnosis and treatment are so nuanced that AI has a long way to go before taking over those tasks from a human.

Through sensors, AI can measure symptoms, like sleep disturbances, pressured speech or other changes in behaviour. However, it is unclear if these measurements fully capture the nuance, judgment and context of human decision making. An AI system may capture a person’s voice and movement, which is likely related to a diagnosis like major depressive disorder. But without more context and judgment, crucial information can be left out. This is especially important when there are cultural differences that could account for diagnosis-relevant behaviour.

Ensuring new technologies are designed with awareness of cultural differences in normative language or behaviour is crucial to engender trust in groups who have been marginalised based on race, age, or other identities.

WSJ: Is privacy also a concern?

DR. MINER: We’ve developed laws over the years to protect mental-health conversations between humans. As apps or other services start asking to be a part of these conversations, users should be able to expect transparency about how their personal experiences will be used and shared.

DR. TOROUS: In prior research, our team identified smartphone apps [used for depression and smoking cessation that] shared data with commercial entities. This is a red flag that the industry needs to pause and change course. Without trust, it is not possible to offer effective mental health care.

DR. MINER: We undervalue and poorly design for trust in AI for healthcare, especially mental health. Medicine has designed processes and policies to engender trust, and AI systems are likely following different rules. The first step is to clarify what is important to patients and clinicians in terms of how information is captured and shared for sensitive disclosures.

Reprinted by permission of The Wall Street Journal, Copyright 2021 Dow Jones & Company, Inc. All Rights Reserved Worldwide. Original date of publication: March 27, 2021.

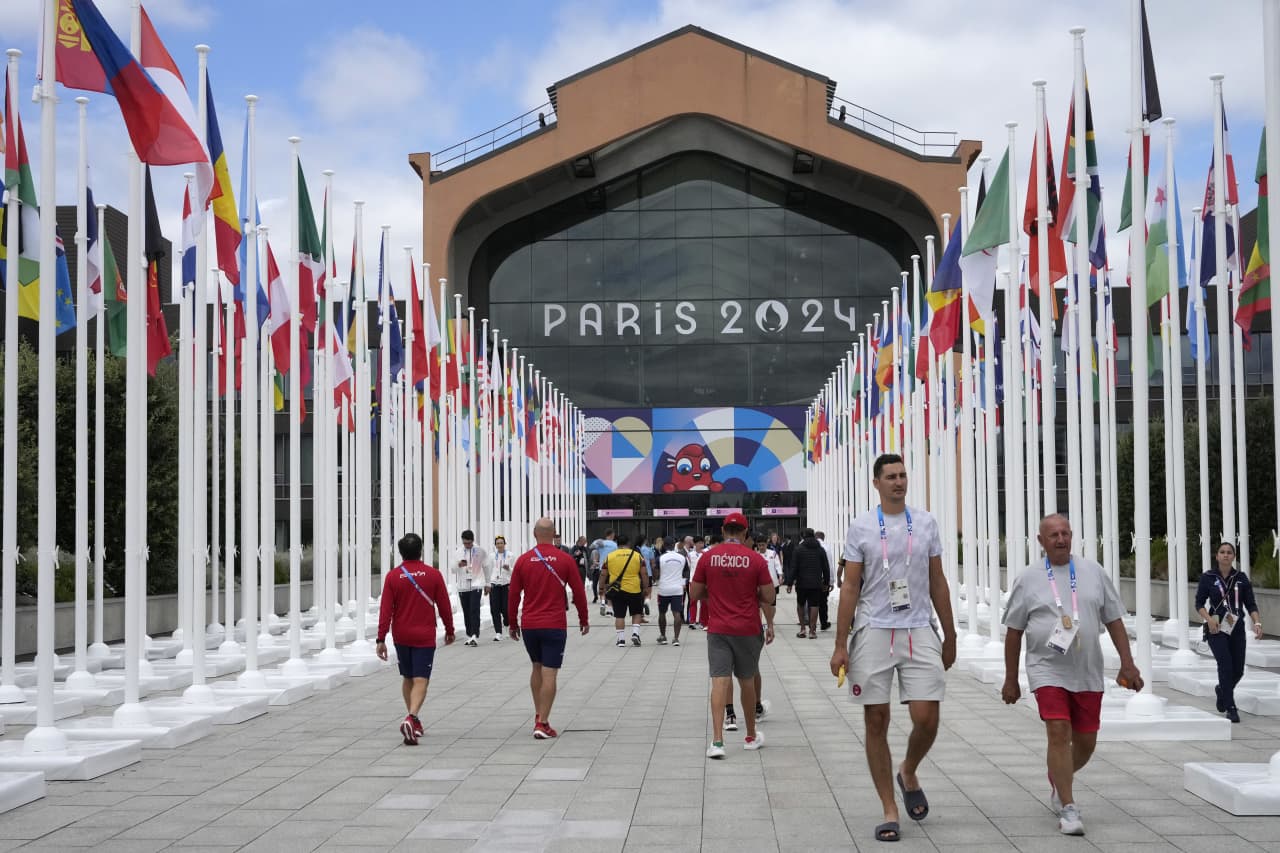

As Paris makes its final preparations for the Olympic games, its residents are busy with their own—packing their suitcases, confirming their reservations, and getting out of town.

Worried about the crowds and overall chaos the Olympics could bring, Parisians are fleeing the city in droves and inundating resort cities around the country. Hotels and holiday rentals in some of France’s most popular vacation destinations, from the French Riviera in the south to the beaches of Normandy in the north, say they are expecting massive crowds this year in advance of the Olympics. The games will run from July 26 to Aug. 11.

“It’s already a major holiday season for us, and beyond that, we have the Olympics,” says Stéphane Personeni, general manager of the Lily of the Valley hotel in Saint Tropez. “People began booking early this year.”

Personeni’s hotel typically has no issues filling its rooms each summer—by May of each year, the luxury hotel typically finds itself completely booked out for the months of July and August. But this year, the 53-room hotel began filling up for summer reservations in February.

“We told our regular guests that everything—hotels, apartments, villas—are going to be hard to find this summer,” Personeni says. His neighbours around Saint Tropez say they’re similarly booked up.

As of March, the online marketplace Gens de Confiance (“Trusted People”) saw a 50% increase in reservations from Parisians seeking vacation rentals outside the capital during the Olympics.

Already, August is a popular vacation time for the French. With a minimum of five weeks of vacation mandated by law, many decide to take the entire month off, renting out villas in beachside destinations for longer periods.

But beyond the typical August travel, the Olympics are having a real impact, says Bertille Marchal, a spokesperson for Gens de Confiance.

“We’ve seen nearly three times more reservations for the dates of the Olympics than the following two weeks,” Marchal says. “The increase is definitely linked to the Olympic Games.”

According to the site, the most sought-out vacation destinations are Morbihan and Loire-Atlantique, a seaside region in the northwest; le Var, a coastal area within the southeast of France along the Côte d’Azur; and the island of Corsica in the Mediterranean.

Meanwhile, the Olympics haven’t necessarily been a boon to foreign tourism in the country. Many tourists who might have otherwise come to France are avoiding it this year in favour of other European capitals. In Paris, demand for stays at high-end hotels has collapsed, with bookings down 50% in July compared to last year, according to UMIH Prestige, which represents hotels charging at least €800 ($865) a night for rooms.

Earlier this year, high-end restaurants and concierges said the Olympics might even be an opportunity to score a hard-to-get seat at the city’s fine-dining establishments.

In the Occitanie region in southwest France, the overall number of reservations this summer hasn’t changed much from last year, says Vincent Gare, president of the regional tourism committee there.

“But looking further at the numbers, we do see an increase in the clientele coming from the Paris region,” Gare told Le Figaro, noting that the increase in reservations has fallen directly on the dates of the Olympic games.

Michel Barré, a retiree living in Paris’s Le Marais neighbourhood, is one of those opting for the beach rather than the opening ceremony. In January, he booked a stay in Normandy for two weeks.

“Even though it’s a major European capital, Paris is still a small city—it’s a massive effort to host all of these events,” Barré says. “The Olympics are going to be a mess.”

More than anything, he just wants some calm after an event-filled summer in Paris, which just before the Olympics experienced the drama of a snap election called by Macron.

“It’s been a hectic summer here,” he says.

Parisians—Barré included—feel that the city, by over-catering to its tourists, is driving out many residents.

Parts of the Seine, usually one of the most popular summertime hangout spots, have been closed off for weeks as the city installs bleachers and Olympics signage. In certain neighbourhoods, residents will need to scan a QR code with police to access their own apartments. And from the Olympics to Sept. 8, Paris is nearly doubling the price of transit tickets from €2.15 to €4 per ride.

The city’s clear willingness to capitalise on its tourists has motivated some residents to do the same. In March, the number of active Airbnb listings in Paris reached an all-time high as hosts rushed to list their apartments. Listings grew 40% from the same time last year, according to the company.

With their regular clients taking off, Parisian restaurants and merchants are complaining that business is down.

“Are there any Parisians left in Paris?” Alain Fontaine, president of the restaurant industry association, told the radio station Franceinfo on Sunday. “For the last three weeks, there haven’t been any here.”

Still, for all the talk of those leaving, there are plenty who have decided to stick around.

Jay Swanson, an American expat and YouTuber, can’t imagine leaving during the Olympics—he secured his tickets to see ping pong and volleyball last year. He’s also less concerned about the crowds and road closures than others, having just put together a series of videos explaining how to navigate Paris during the games.

“It’s been 100 years since the Games came to Paris; when else will we get a chance to host the world like this?” Swanson says. “So many Parisians are leaving and tourism is down, so not only will it be quiet but the only people left will be here for a party.”
