Business Schools Are Going All In on AI

American University, other top M.B.A. programs reorient courses around artificial intelligence; ‘It has eaten our world’

At the Wharton School this spring, Prof. Ethan Mollick assigned students the task of automating away part of their jobs.

Mollick tells his students at the University of Pennsylvania to expect to feel insecure about their own capabilities once they understand what artificial intelligence can do.

“You haven’t used AI until you’ve had an existential crisis,” he said. “You need three sleepless nights.”

Top business schools are pushing M.B.A. candidates and undergraduates to use artificial intelligence as a second brain. Students are eager for the instruction as employers increasingly hire talent with AI skills.

American University’s Kogod School of Business is putting an unusually high emphasis on AI, threading teaching on the technology through 20 new or adapted classes, from forensic accounting to marketing, which will roll out next school year. Professors this week started training on how to use and teach AI tools.

Understanding and using AI is now a foundational concept, much like learning to write or reason, said David Marchick, dean of Kogod.

“Every young person needs to know how to use AI in whatever they do,” he said of the decision to embed AI instruction into every part of the business school’s undergraduate core curriculum.

Marchick, who uses ChatGPT to prep presentations to alumni and professors, ordered a review of Kogod’s coursework in December after Brett Wilson, a venture capitalist with Swift Ventures, visited campus and told students that they wouldn’t lose jobs to AI, but rather to professionals who are more skilled in deploying it.

American’s new AI classwork will include text mining, predictive analytics and using ChatGPT to prepare for negotiations, whether navigating workplace conflict or advocating for a promotion. New courses include one on AI in human-resource management and a new business and entertainment class focused on AI, a core issue of last year’s Hollywood writers strike.

Officials and faculty at Columbia Business School and Duke University’s Fuqua School of Business say fluency in AI will be key to graduates’ success in the corporate world, allowing them to climb the ranks of management. Forty percent of prospective business-school students surveyed by the Graduate Management Admission Council said learning AI is essential to a graduate business degree—a jump from 29% in 2022.

Many of them are also anxious that their jobs could be replaced by generative AI. Much of entry-level work could be automated, the management-consulting group Oliver Wyman projected in a recent report. That means that future early-career jobs might require a more muscular skillset and more closely resemble first-level management roles.

Faster thinking

Business-school professors are now encouraging students to use generative AI as a tool, akin to a calculator for doing math.

M.B.A.s should be using AI to generate ideas quickly and comprehensively, according to Sheena Iyengar, a Columbia Business School professor who wrote “Think Bigger,” a book on innovation. But it’s still up to people to make good decisions and ask the technology the right questions.

“You still have to direct it, otherwise it will give you crap,” she said. “You cannot eliminate human judgment.”

One exercise that Iyengar walks her students through is using AI to generate business idea pitches from the simulated perspectives of Tom Brady, Martha Stewart and Barack Obama. The assignment illustrates how ideas can be reframed for different audiences and from different points of view.

Blake Bergeron, a 27-year-old M.B.A. student at Columbia, used generative AI to brainstorm new business ideas for a project last fall. One it returned was a travel service that recommends destinations based on a person’s social networks, pulling data from their friends’ posts. Bergeron’s team asked the AI to pressure-test the idea by listing its pros and cons, and to suggest potential business models.

Bergeron said he noticed pitfalls as he experimented. When his team asked the generative AI tool for ways to market the travel service, it spit out a group of very similar ideas. From there, Bergeron said, the students had to coax the tool to get creative, asking for one out-of-the-box idea at a time.

Professors say that through this instruction, they hope students learn where AI is currently weak. Mathematics and citations are two areas where mistakes abound. At Kogod this week, executives who were training professors in AI stressed that adopters of the technology needed to do a human review and edit all AI-generated content, including analysis, before sharing the materials.

Faster doing

When Robert Bray, who teaches operations management at Northwestern’s Kellogg School of Management, realised that ChatGPT could answer nearly every question in the textbook he uses for his data analytics course, he updated the syllabus. Last year, he started to focus on teaching coding using large language models, which are trained on vast amounts of data to generate text and code. Enrolment jumped to 55 from 21 M.B.A. students, he said.

Before, engineers had an edge against business graduates because of their technical expertise, but now M.B.A.s can use AI to compete in that zone, Bray said.

He encourages his students to offload as much work as possible to AI, treating it like “a really proficient intern.”

Ben Morton, one of Bray’s students, is bullish on AI but knows he needs to be able to work without it. He did some coding with ChatGPT for class and wondered: If ChatGPT were down for a week, could he still get work done?

Learning to code with the help of generative AI sped up his development.

“I know so much more about programming than I did six months ago,” said Morton, 27. “Everyone’s capabilities are exponentially increasing.”

Several professors said they can teach more material with AI’s assistance. One said that because AI could solve his lab assignments, he no longer needed much of the class time for those activities. With the extra hours, he has students present to their peers on AI innovations. Campus is where students should think through how to use AI responsibly, said Bill Boulding, dean of Duke’s Fuqua School.

“How do we embrace it? That is the right way to approach this—we can’t stop this,” he said. “It has eaten our world. It will eat everyone else’s world.”

Copyright 2020, Dow Jones & Company, Inc. All Rights Reserved Worldwide. LEARN MORE

Copyright 2020, Dow Jones & Company, Inc. All Rights Reserved Worldwide. LEARN MORE

This stylish family home combines a classic palette and finishes with a flexible floorplan

Just 55 minutes from Sydney, make this your creative getaway located in the majestic Hawkesbury region.

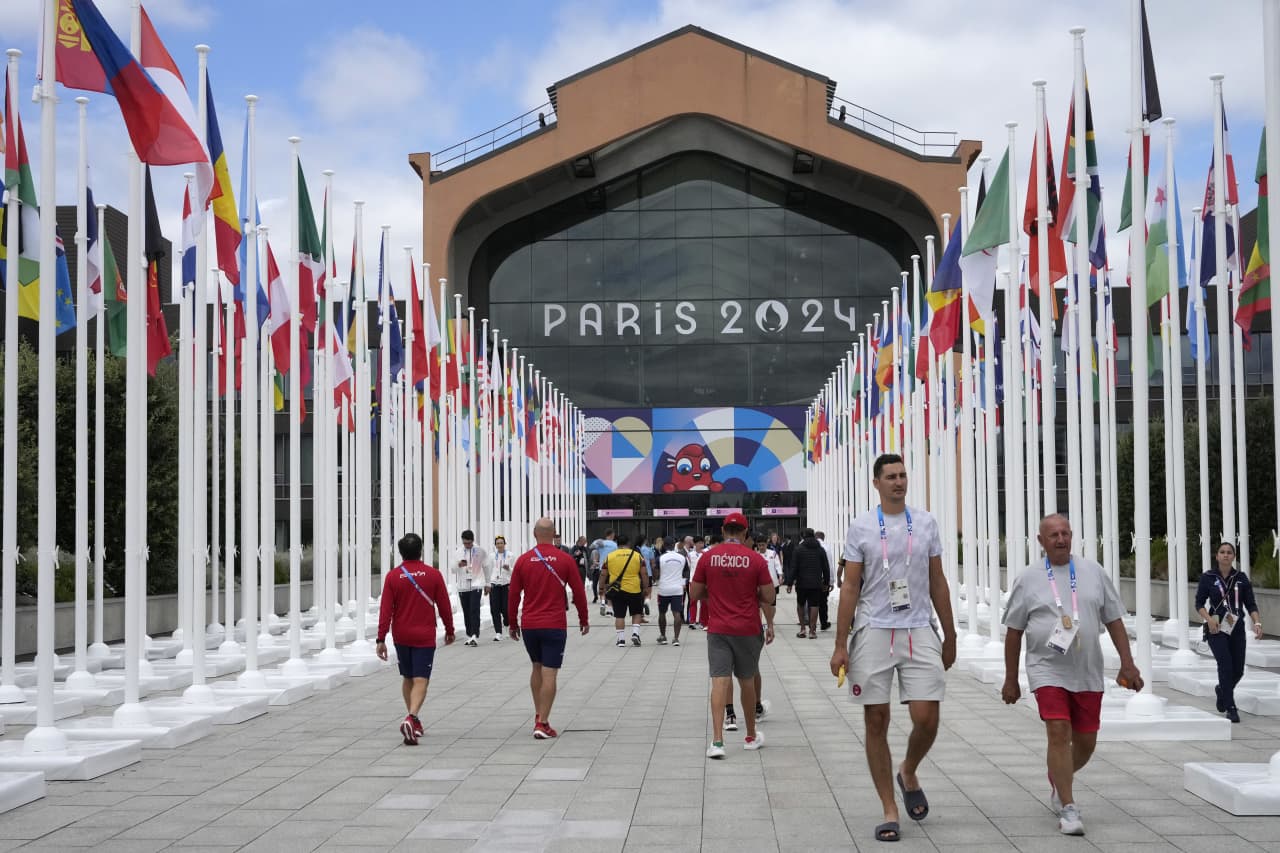

As Paris makes its final preparations for the Olympic games, its residents are busy with their own—packing their suitcases, confirming their reservations, and getting out of town.

Worried about the crowds and overall chaos the Olympics could bring, Parisians are fleeing the city in droves and inundating resort towns around the country. Hotels and holiday rentals in some of France’s most popular vacation destinations, from the French Riviera in the south to the beaches of Normandy in the north, say they are expecting massive crowds this year because of the Olympics. The games will run from July 26 to Aug. 11.

“It’s already a major holiday season for us, and beyond that, we have the Olympics,” says Stéphane Personeni, general manager of the Lily of the Valley hotel in Saint Tropez. “People began booking early this year.”

Personeni’s hotel typically has no trouble filling its rooms each summer: by May of each year, the luxury hotel is usually fully booked for July and August. But this year, the 53-room hotel began filling up with summer reservations in February.

“We told our regular guests that everything—hotels, apartments, villas—is going to be hard to find this summer,” Personeni says. His neighbours around Saint Tropez say they’re similarly booked up.

As of March, the online marketplace Gens de Confiance (“Trusted People”) had seen a 50% increase in reservations from Parisians seeking vacation rentals outside the capital during the Olympics.

Already, August is a popular vacation time for the French. With a minimum of five weeks of vacation mandated by law, many decide to take the entire month off, renting out villas in beachside destinations for longer periods.

But beyond the typical August travel, the Olympics are having a real impact, says Bertille Marchal, a spokesperson for Gens de Confiance.

“We’ve seen nearly three times more reservations for the dates of the Olympics than the following two weeks,” Marchal says. “The increase is definitely linked to the Olympic Games.”

According to the site, the most sought-after vacation destinations are Morbihan and Loire-Atlantique, seaside departments in the northwest; le Var, a coastal area in southeastern France along the Côte d’Azur; and the island of Corsica in the Mediterranean.

Meanwhile, the Olympics haven’t necessarily been a boon to foreign tourism in the country. Many tourists who might have otherwise come to France are avoiding it this year in favour of other European capitals. In Paris, demand for stays at high-end hotels has collapsed, with bookings down 50% in July compared to last year, according to UMIH Prestige, which represents hotels charging at least €800 ($865) a night for rooms.

Earlier this year, high-end restaurants and concierges said the Olympics might even be an opportunity to score a hard-to-get seat at one of the city’s fine-dining restaurants.

In the Occitanie region in southwest France, the overall number of reservations this summer hasn’t changed much from last year, says Vincent Gare, president of the regional tourism committee there.

“But looking further at the numbers, we do see an increase in the clientele coming from the Paris region,” Gare told Le Figaro, noting that the increase in reservations has fallen directly on the dates of the Olympic games.

Michel Barré, a retiree living in Paris’s Le Marais neighbourhood, is one of those opting for the beach rather than the opening ceremony. In January, he booked a stay in Normandy for two weeks.

“Even though it’s a major European capital, Paris is still a small city—it’s a massive effort to host all of these events,” Barré says. “The Olympics are going to be a mess.”

More than anything, he just wants some calm after an event-filled summer in Paris, which just before the Olympics experienced the drama of a snap election called by President Emmanuel Macron.

“It’s been a hectic summer here,” he says.

Parisians—Barré included—feel that the city, by over-catering to its tourists, is driving out many residents.

Parts of the Seine—usually one of the most popular summertime hangout spots—have been closed off for weeks as the city installs bleachers and Olympics signage. In certain neighbourhoods, residents will need to show police a QR code to access their own apartments. And from the Olympics through Sept. 8, Paris is nearly doubling the price of transit tickets, from €2.15 to €4 per ride.

The city’s clear willingness to capitalise on its tourists has motivated some residents to do the same. In March, the number of active Airbnb listings in Paris reached an all-time high as hosts rushed to list their apartments. Listings grew 40% from the same time last year, according to the company.

With their regular clients taking off, Parisian restaurants and merchants are complaining that business is down.

“Are there any Parisians left in Paris?” Alaine Fontaine, president of the restaurant industry association, told the radio station Franceinfo on Sunday. “For the last three weeks, there haven’t been any here.”

Still, for all the talk of those leaving, there are plenty who have decided to stick around.

Jay Swanson, an American expat and YouTuber, can’t imagine leaving during the Olympics—he secured his tickets to see ping pong and volleyball last year. He’s also less concerned about the crowds and road closures than others, having just put together a series of videos explaining how to navigate Paris during the games.

“It’s been 100 years since the Games came to Paris; when else will we get a chance to host the world like this?” Swanson says. “So many Parisians are leaving and tourism is down, so not only will it be quiet but the only people left will be here for a party.”